Data annotation has become the backbone of artificial intelligence development, serving as the critical bridge between raw data and intelligent machine learning models. As AI systems become increasingly sophisticated, the demand for high-quality annotated data continues to surge across industries worldwide.

What is Data Annotation?

Data annotation is the process of labeling or tagging data to make it recognizable and usable by machine learning algorithms. Think of it as teaching a computer to understand the world the way humans do, by providing clear, consistent examples of what things are and how they relate to each other.

A single data annotation is, at its simplest, a label, tag, or markup applied to raw data. These annotations serve as ground truth for training AI models, helping them learn to identify patterns, make predictions, and perform tasks autonomously. From identifying objects in images to transcribing speech to text, data annotation enables machines to learn from human expertise and judgment.

What is a data annotator?

A data annotator is a professional who carefully reviews data and applies accurate labels according to specific guidelines and standards. These individuals combine attention to detail with domain expertise to ensure AI models receive the highest quality training data possible.

The importance of data annotation cannot be overstated in 2026. As generative AI, autonomous vehicles, medical diagnostics, and countless other AI applications advance, the need for precisely annotated datasets has become mission-critical. Without properly annotated data, even the most sophisticated algorithms cannot learn effectively or produce reliable results.

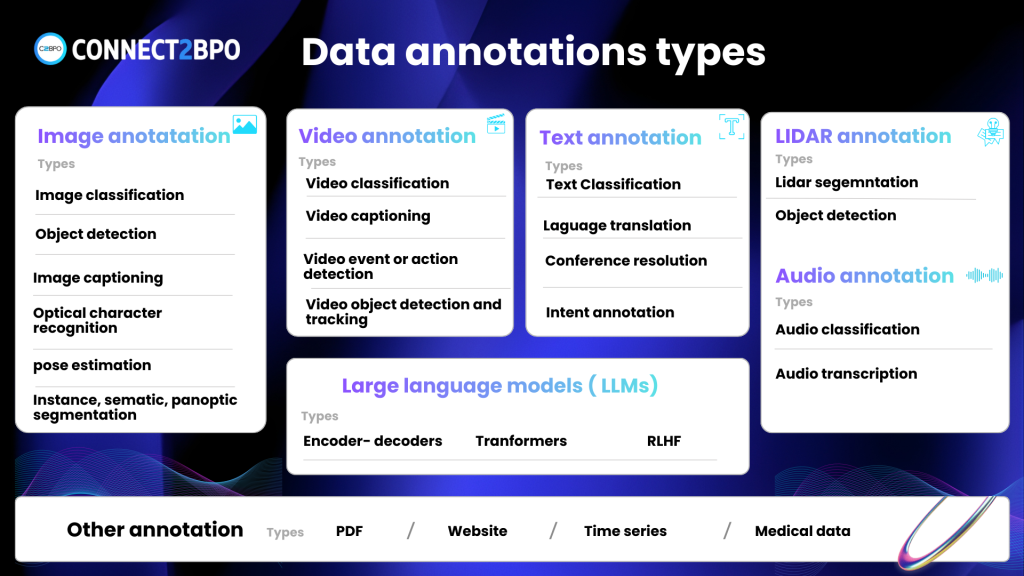

Types of Data Annotation

The field of data annotation encompasses multiple specialized disciplines, each designed to handle different data modalities and use cases. Understanding these various types helps organizations choose the right approach for their specific AI projects.

Large Language Models (LLM) Annotation

LLM annotation has emerged as one of the most rapidly growing segments in the data annotation industry. This specialized form of annotation involves evaluating, ranking, and refining the outputs of large language models to improve their performance and alignment with human values.

LLM annotation typically includes several key activities. Human annotators assess model responses for accuracy, helpfulness, harmlessness, and honesty. They compare multiple responses to the same prompt and rank them by quality. Annotators also identify instances where models produce harmful, biased, or factually incorrect content, helping developers understand failure modes and edge cases.

The work extends to reinforcement learning from human feedback (RLHF), where annotators provide detailed feedback that shapes model behavior over time. This includes evaluating conversational coherence, factual accuracy, reasoning capabilities, and adherence to ethical guidelines. As a category of AI data annotation jobs, LLM annotation requires strong language skills, critical thinking abilities, and often domain-specific expertise.
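As a rough illustration of how the ranking task described above can feed preference-based training, the sketch below expands one annotator's ranking into pairwise comparisons. The function name `ranking_to_pairs` and the `prompt`/`chosen`/`rejected` field names are assumptions made for this example, not a format required by any particular platform.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a ranking (best response first) into (chosen, rejected) pairs.

    Hypothetical helper: every higher-ranked response is treated as
    preferred over every lower-ranked one.
    """
    pairs = []
    # combinations() keeps input order, so `better` always outranks `worse`.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs


# Example: three responses ranked by an annotator, best first.
pairs = ranking_to_pairs(
    "Explain what data annotation is.",
    ["response A", "response B", "response C"],
)
for pair in pairs:
    print(pair["chosen"], ">", pair["rejected"])
```

A ranking of n responses yields n(n-1)/2 pairs, which is one reason ranking several responses at once can be more efficient than collecting each comparison separately.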

Red-teaming has also become an essential component of LLM annotation. Annotators deliberately attempt to elicit problematic responses from models, helping developers identify vulnerabilities and improve safety measures. This adversarial approach to AI data annotation has become standard practice for responsible AI development.

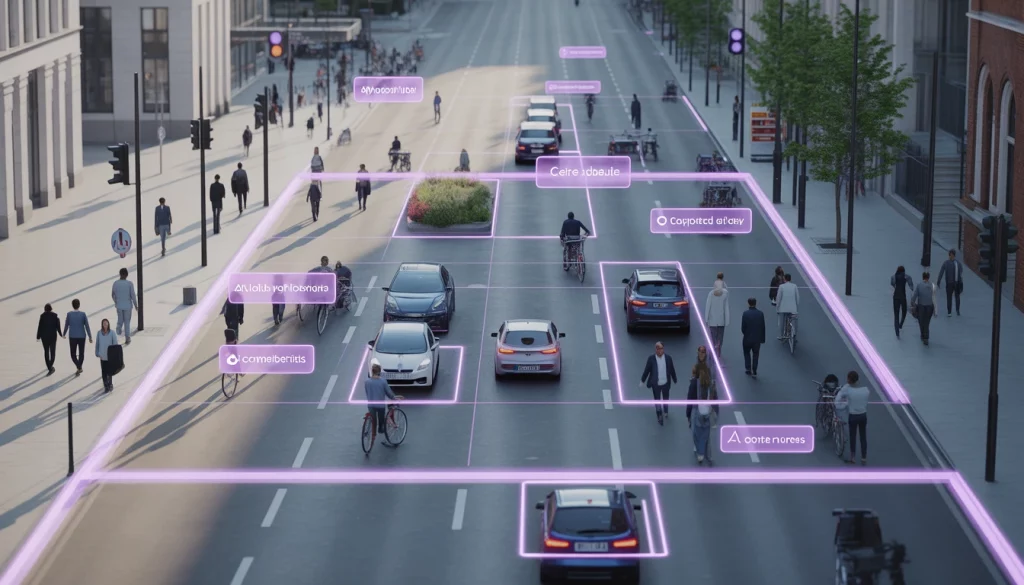

Image Annotation

Image annotation involves labeling visual elements within photographs, illustrations, or other static images. This foundational form of AI data annotation powers computer vision applications ranging from autonomous vehicles to medical imaging diagnostics.

Common image annotation techniques include bounding boxes, where annotators draw rectangular frames around objects of interest; polygon annotation for irregular shapes requiring precise boundaries; semantic segmentation, which involves labeling every pixel in an image according to its class; and keypoint annotation for identifying specific points of interest, such as facial landmarks or body joints.
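Bounding boxes from two annotators, or from an annotator and a reference label, are often compared using intersection over union (IoU). The sketch below assumes boxes are stored as `(x_min, y_min, x_max, y_max)` corner coordinates; that convention and the function name `iou` are choices made for this example, and real tools may store boxes differently.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max); this layout is an
    assumption for the example.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap, clamped to zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two annotators' boxes for the same object.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

An IoU of 1.0 means the boxes coincide exactly and 0.0 means they do not overlap; the threshold at which two boxes count as agreeing is a project-level decision.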

In 2026, image annotation has evolved to handle increasingly complex scenarios. Annotators now work with multi-class labeling where single images contain dozens of object categories, overlapping objects that require careful boundary definition, and contextual relationships between objects. For instance, autonomous vehicle training requires not just identifying pedestrians, vehicles, and traffic signs, but understanding their relationships and potential interactions.

Medical image annotation represents a particularly specialized domain, where trained professionals label CT scans, MRIs, X-rays, and pathology slides. These annotations help AI systems detect diseases, identify anatomical structures, and assist in diagnosis with potentially life-saving accuracy.

Video Annotation

Video annotation extends image annotation across the temporal dimension, adding complexity and richness to the labeling process. In video contexts, data annotation means the frame-by-frame or segment-based labeling of moving visual content, tracking objects and actions through time.

Video annotation includes object tracking, where items are followed continuously across frames; action recognition, which labels specific behaviors or events; temporal segmentation, dividing videos into meaningful segments; and scene classification, categorizing entire video sequences by content or context.
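One common way to reduce frame-by-frame effort in object tracking is to label only keyframes and interpolate the boxes in between, with the annotator correcting frames where motion is not linear. The following is a minimal sketch of linear interpolation; the function name `interpolate_boxes` and the `(x_min, y_min, x_max, y_max)` box layout are assumptions for illustration.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate a box between two annotated keyframes.

    Returns a dict mapping every frame index in [start_frame, end_frame]
    to an interpolated (x_min, y_min, x_max, y_max) tuple.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must be greater than start_frame")

    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[frame] = tuple(s + t * (e - s) for s, e in zip(start_box, end_box))
    return boxes


# An object moving to the right between frame 0 and frame 10.
track = interpolate_boxes(0, (0, 0, 10, 10), 10, (10, 0, 20, 10))
print(track[5])
```

Linear interpolation is only a starting point: occlusions, abrupt direction changes, and objects leaving the frame still need manual keyframes.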

The challenges of video annotation are substantial. Annotators must maintain consistency across thousands of frames while objects change appearance, become occluded, or move in and out of frame. For applications like sports analytics, surveillance systems, or entertainment content moderation, precise video annotation is essential.

Emerging video annotation applications in 2026 include annotating augmented reality content, labeling videos for recommendation systems, identifying unsafe content for platform moderation, and creating training data for robotics systems that must understand dynamic environments.

Text Annotation

Text annotation encompasses a broad range of labeling tasks applied to written content. This form of data annotation supports natural language processing (NLP) applications including chatbots, sentiment analysis, information extraction, and machine translation.

Key text annotation types include named entity recognition (NER), where annotators identify and classify names, locations, organizations, dates, and other entities; sentiment annotation, labeling emotional tone as positive, negative, or neutral; intent classification, determining the purpose behind a statement or query; and relationship extraction, identifying how entities relate to one another within text.
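Named entity annotations are frequently stored as labeled spans and then converted to per-token tags for model training. The sketch below shows one such conversion to the widely used BIO scheme (B = beginning of an entity, I = inside, O = outside). The span layout `(start_token, end_token_exclusive, label)` and the function name `spans_to_bio` are assumptions for this example.

```python
def spans_to_bio(tokens, spans):
    """Convert entity spans to BIO tags, one tag per token.

    spans: list of (start_token, end_token_exclusive, label) tuples,
    assumed not to overlap.
    """
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # remaining tokens of the entity
    return tags


tokens = ["Ada", "Lovelace", "visited", "London"]
spans = [(0, 2, "PER"), (3, 4, "LOC")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(token, tag)
```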

Text annotation has grown more sophisticated with the rise of large language models. Modern text annotation projects often involve nuanced tasks like identifying factual claims for verification, labeling argumentative structures, annotating discourse relations between sentences, and marking text for bias, toxicity, or misinformation.

For multilingual AI systems, text annotation must account for linguistic and cultural variations. What do data annotation jobs involve in text projects? Annotators must possess strong language skills, cultural awareness, and the ability to apply complex annotation schemas consistently across large document collections.

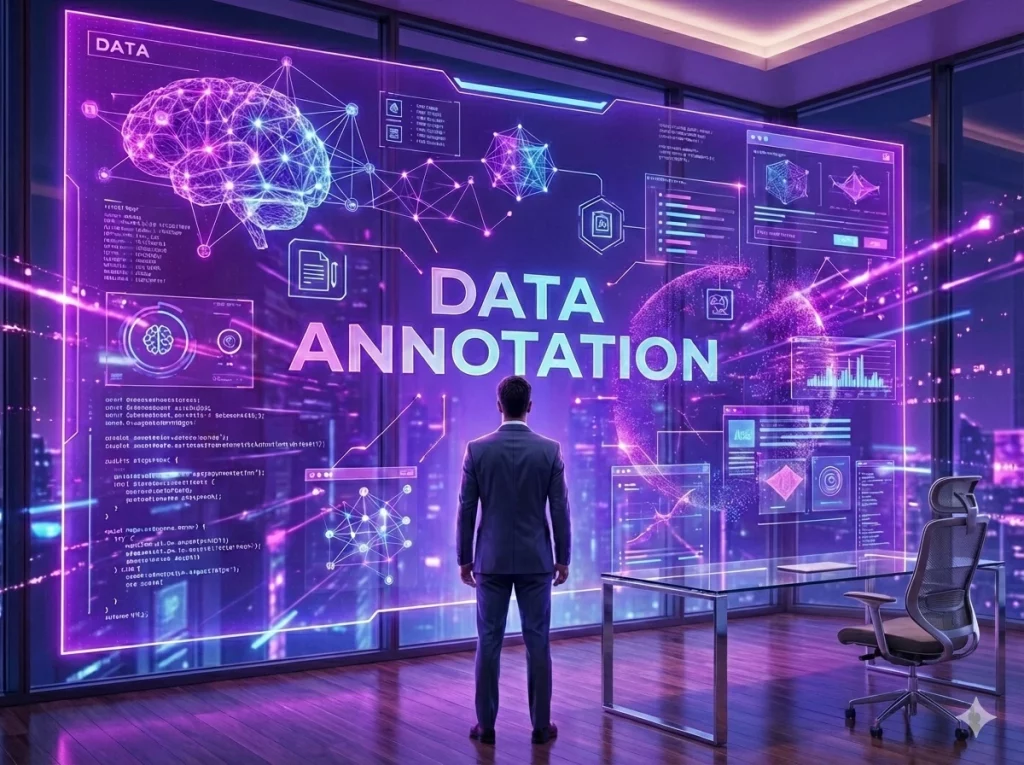

Audio Annotation

Audio annotation involves labeling sound recordings for speech recognition, audio event detection, speaker identification, and music information retrieval systems. This specialized field requires both technical precision and domain expertise.

Primary audio annotation tasks include speech transcription, converting spoken words to written text with careful attention to speaker turns, timestamps, and non-verbal sounds; speaker diarization, identifying who spoke when in multi-speaker recordings; audio event labeling, marking specific sounds like alarms, crashes, or ambient noise; and acoustic scene classification, categorizing the environment where recording occurred.
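Speaker diarization output is typically a list of time-stamped segments, each attributed to a speaker. As a small sketch of working with such labels, the code below totals speaking time per speaker. The `speaker`/`start`/`end` field names (times in seconds) and the function name `speaking_time` are assumptions for illustration, not a standard file format.

```python
from collections import defaultdict


def speaking_time(segments):
    """Sum the duration of each speaker's segments, in seconds.

    segments: list of dicts with hypothetical keys
    'speaker', 'start', and 'end'.
    """
    totals = defaultdict(float)
    for seg in segments:
        if seg["end"] < seg["start"]:
            raise ValueError(f"segment ends before it starts: {seg}")
        totals[seg["speaker"]] += seg["end"] - seg["start"]
    return dict(totals)


segments = [
    {"speaker": "A", "start": 0.0, "end": 2.5},
    {"speaker": "B", "start": 2.5, "end": 4.0},
    {"speaker": "A", "start": 4.0, "end": 5.0},
]
print(speaking_time(segments))
```

The validity check on each segment is the kind of simple automated test that can catch timestamp mistakes before a recording reaches human review.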

Advanced audio annotation in 2026 includes emotion recognition from voice, dialect and accent identification, music genre and mood tagging, and acoustic quality assessment. For voice assistant development, annotators evaluate not just transcription accuracy but also the appropriateness of system responses to voice commands.

The challenges of audio annotation include handling poor audio quality, overlapping speakers, background noise, and diverse accents or speaking styles. Professional audio annotators often use specialized software that displays waveforms and spectrograms alongside playback controls, enabling precise temporal labeling.

LiDAR Annotation

LiDAR (Light Detection and Ranging) annotation has become indispensable for autonomous vehicle development and robotics applications. LiDAR sensors create three-dimensional point clouds representing the physical environment, and annotators must label these complex 3D structures.

LiDAR annotation involves 3D bounding box placement around objects in point cloud data, semantic segmentation where each point receives a class label, ground plane estimation, and tracking objects across sequential LiDAR frames to understand motion and trajectory.
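To make 3D bounding box placement more concrete, the sketch below finds which points of a point cloud fall inside a box. It is deliberately simplified: it assumes an axis-aligned box given by its minimum and maximum corners, whereas production LiDAR boxes are usually also rotated around the vertical axis. The function name `points_in_box` is hypothetical.

```python
def points_in_box(points, box_min, box_max):
    """Return the indices of points inside an axis-aligned 3D box.

    points: list of (x, y, z) tuples.
    box_min, box_max: opposite corners of the box. The axis-aligned box
    is a simplifying assumption; rotated boxes need an extra transform.
    """
    inside = []
    for index, point in enumerate(points):
        if all(lo <= c <= hi for c, lo, hi in zip(point, box_min, box_max)):
            inside.append(index)
    return inside


cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (5.0, 5.0, 5.0)]
print(points_in_box(cloud, (-0.5, -0.5, -0.5), (1.5, 1.5, 1.5)))
```

Counting the points inside a labeled box is one simple sanity check: a box that contains no points at all is likely misplaced.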

The complexity of LiDAR annotation requires specialized tools and training. Annotators work in three-dimensional space, rotating viewpoints and adjusting perspectives to ensure accurate labeling. They must distinguish between vehicles, pedestrians, cyclists, infrastructure elements, and vegetation in data that can be sparse, noisy, or incomplete.

In 2026, multi-sensor fusion annotation has become common, where annotators work simultaneously with LiDAR, camera images, and radar data to create comprehensive labeled datasets. This approach, combining multiple data modalities, produces richer training data for perception systems that must operate safely in complex real-world environments.

Data Annotation Tech

Data annotation tech refers to the platforms, tools, and software solutions that facilitate the annotation process. These technologies have evolved dramatically to meet the growing demands for scale, accuracy, and efficiency in AI training data production.

Modern data annotation platforms offer comprehensive feature sets including intuitive annotation interfaces with specialized tools for each data type, quality control mechanisms with multi-tier review processes, project management capabilities for coordinating teams, automated pre-labeling using AI assistance, and detailed analytics and reporting dashboards.

AI-powered data annotation technologies have substantially improved efficiency and accuracy through machine learning assistance. These systems use existing models to provide preliminary annotations that human annotators refine and correct. This human-in-the-loop approach dramatically accelerates annotation while maintaining high quality standards.
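One possible way to organize a human-in-the-loop workflow is to route model pre-labels by confidence: high-confidence predictions go to a quick spot check, and the rest go to full human review. The sketch below assumes each prediction carries a `confidence` score between 0 and 1; the 0.9 threshold, the field names, and the function name `route_prelabels` are illustrative assumptions, not recommended values.

```python
def route_prelabels(predictions, threshold=0.9):
    """Split model pre-labels into two human review queues.

    predictions: list of dicts with a hypothetical 'confidence' key.
    Returns (spot_check, full_review); both queues are still seen by humans.
    """
    spot_check, full_review = [], []
    for pred in predictions:
        if pred["confidence"] >= threshold:
            spot_check.append(pred)
        else:
            full_review.append(pred)
    return spot_check, full_review


predictions = [
    {"item_id": "img_001", "label": "car", "confidence": 0.97},
    {"item_id": "img_002", "label": "truck", "confidence": 0.55},
]
spot_check, full_review = route_prelabels(predictions)
print([p["item_id"] for p in spot_check], [p["item_id"] for p in full_review])
```

Model confidence is not always well calibrated, so a threshold like this is usually tuned against audited samples rather than fixed in advance.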

Leading data annotation platforms in 2026 incorporate advanced capabilities like active learning, where systems identify the most valuable data points to annotate next; consensus mechanisms that require multiple annotators to agree before finalizing labels; version control for tracking annotation changes over time; and API integrations that connect annotation workflows seamlessly with model training pipelines.
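Two of the capabilities above can be sketched in a few lines. The first function illustrates active learning through uncertainty sampling, picking the items whose predicted class probabilities have the highest entropy. The second illustrates a simple consensus rule based on majority vote. Both are minimal sketches: the function names, the `min_agreement` parameter, and the data layout are assumptions, and real platforms may use different selection and agreement strategies.

```python
import math
from collections import Counter


def entropy(probs):
    """Shannon entropy of a probability distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(pool, k):
    """Return the k item ids whose predictions are most uncertain.

    pool: dict mapping item id -> list of class probabilities.
    """
    ranked = sorted(pool, key=lambda item_id: entropy(pool[item_id]), reverse=True)
    return ranked[:k]


def consensus_label(votes, min_agreement=2):
    """Return the majority label, or None if too few annotators agree."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count >= min_agreement else None


pool = {"a": [0.5, 0.5], "b": [0.99, 0.01], "c": [0.7, 0.3]}
print(select_most_uncertain(pool, 2))
print(consensus_label(["cat", "cat", "dog"]))
print(consensus_label(["cat", "dog", "bird"]))
```

Items that fail the consensus rule (a `None` result here) would typically be escalated to an expert reviewer rather than discarded.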

The technology stack extends beyond annotation interfaces to include data management systems for organizing massive datasets, collaboration tools for distributed annotation teams, security infrastructure to protect sensitive data, and quality assurance algorithms that detect annotation errors or inconsistencies automatically.

Cloud-based annotation platforms have become the standard, offering scalability, accessibility, and reduced infrastructure costs. Organizations can spin up annotation projects rapidly, scale workforces up or down based on project needs, and access their data and annotations from anywhere in the world.

Is Data Annotation Tech Legit?

A common question among those exploring the industry is: is data annotation tech legit? The answer is yes: data annotation has evolved into a legitimate, multi-billion dollar industry that underpins the entire artificial intelligence ecosystem.

When people ask “is data annotation legit,” they’re often concerned about job opportunities, platform reliability, or the business model’s sustainability. Data annotation represents a genuine economic sector with established players, recognized standards, and clear value propositions for both workers and enterprises.

Major technology companies including Google, Amazon, Microsoft, Meta, and Apple all maintain substantial data annotation operations. Google's data labeling and annotation work, for example, supports search, advertising, and AI products used by billions. These companies have invested heavily in annotation infrastructure because quality training data is essential to their competitive advantage.

The legitimacy of data annotation tech is further evidenced by the emergence of specialized companies dedicated exclusively to annotation services. These firms employ thousands of annotators, maintain rigorous quality standards, and serve clients across industries. Their sustained growth and profitability demonstrate that data annotation services address real market needs.

However, the question “is data annotation a scam” does arise because, like any industry, data annotation includes both reputable operators and questionable actors. Legitimate data annotation companies provide clear terms of service, transparent payment structures, reasonable quality standards, reliable communication and support, and appropriate data security measures.

Warning signs of potentially problematic platforms include requests for upfront payments from workers, unrealistic earning promises, lack of clear company information or contact details, poor data security practices, and consistently negative data annotation reviews from former workers or clients.

For those seeking AI data annotation jobs, researching companies thoroughly is essential. Look for established firms with verifiable business operations, read data annotation reviews from multiple sources, verify payment histories through worker communities, and understand the terms of engagement before committing time and effort.

The data annotation industry has matured considerably, developing professional standards, ethical guidelines, and quality frameworks. Academic institutions and industry consortia have published best practices for annotation projects, addressing issues like fair compensation, worker rights, data privacy, and annotation quality assurance.

Data Annotation Jobs and AI Data Annotation Jobs

The job market for data annotators has expanded tremendously, offering diverse opportunities for individuals with varying skill levels and backgrounds. AI data annotation jobs represent a growing segment of the global workforce, providing flexible employment options and pathways into the technology sector.

What do data annotation jobs require? Entry-level positions typically require attention to detail, basic computer skills, reliable internet access, and the ability to follow detailed guidelines. More specialized roles may require domain expertise in fields like medicine, law, or engineering, language proficiency for multilingual projects, or technical skills for complex annotation types like LiDAR or video tracking.

Remote AI data annotation jobs have proliferated, enabling workers worldwide to participate in annotation projects. This geographic flexibility has created employment opportunities in regions with limited traditional job markets while giving companies access to global talent pools with diverse perspectives and expertise.

Common data annotation job roles include general annotators who handle straightforward labeling tasks, quality assurance specialists who review and verify annotations, project managers who coordinate annotation teams and ensure deliverables meet specifications, annotation tool developers who build and maintain annotation platforms, and AI trainer positions that involve providing specialized feedback to AI models.

Compensation for data annotation work varies significantly based on task complexity, required expertise, geographic location, and employment model. Entry-level tasks might pay modest hourly rates, while specialized annotation requiring domain expertise can command substantially higher compensation. Full-time positions with benefits have become more common as the industry professionalizes.

The career trajectory for data annotators has evolved beyond simple labeling work. Experienced annotators can advance to quality assurance roles, project management positions, or specialized annotation trainer roles where they develop guidelines and train other annotators. Some transition into AI development roles, leveraging their deep understanding of training data requirements.

For those seeking remote AI data annotation jobs, numerous platforms connect workers with annotation projects. These include established crowdsourcing platforms with diverse task offerings, specialized annotation companies focusing exclusively on data labeling, and direct employment by technology companies building AI systems. Each model offers different advantages in terms of flexibility, stability, and earning potential.

The skills developed through annotation work are increasingly valuable in the broader AI ecosystem. Annotators gain understanding of machine learning workflows, develop critical evaluation skills, learn to work with diverse data types, and build familiarity with AI capabilities and limitations. These competencies can serve as stepping stones to more advanced roles in AI development, testing, and product management.

Data Annotation Service Providers

Organizations seeking to build AI systems typically choose between in-house annotation, crowdsourcing, or partnering with professional data annotation service providers. Each approach offers distinct advantages depending on project requirements, budget constraints, and quality standards.

Professional data annotation service provider companies offer comprehensive solutions including managed annotation workforces with trained specialists, robust quality assurance processes with multi-tier review, secure data handling infrastructure meeting industry standards, scalable capacity to handle projects of any size, and domain-specific expertise for specialized applications.

Human-powered data annotation services remain essential despite advances in automation. Human judgment is irreplaceable for tasks requiring contextual understanding, nuanced interpretation, ethical considerations, or handling of edge cases that algorithms struggle to process. Human-powered data annotation service providers emphasize quality over pure speed, recognizing that training data accuracy directly impacts model performance.

Leading data annotation services differentiate themselves through several factors. Workforce quality is paramount: companies with rigorous annotator selection, comprehensive training programs, and retention strategies consistently deliver superior results. Quality assurance infrastructure, including statistical sampling, inter-annotator agreement metrics, and expert review layers, ensures annotation reliability.
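Cohen's kappa is one widely used inter-annotator agreement metric for two annotators assigning categorical labels. It corrects the raw agreement rate for the agreement expected by chance. The sketch below is a plain-Python version for illustration; the function name `cohens_kappa` and the sample labels are made up for this example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")

    n = len(labels_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: based on how often each annotator uses each label.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))
```

In this example the annotators agree on 3 of 4 items (0.75), chance agreement is 0.5, and kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5. What counts as an acceptable kappa depends on the task and is usually set in the project's quality guidelines.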

Domain specialization has become a key competitive advantage for data annotation service providers. Medical annotation requires healthcare professionals who understand anatomy and pathology. Legal document annotation needs lawyers or paralegals familiar with legal terminology. Autonomous vehicle annotation demands understanding of traffic rules and driving contexts. Providers with deep expertise in specific domains can deliver annotations that general-purpose platforms cannot match.

The data annotation service landscape includes several provider categories. Enterprise annotation platforms serve large technology companies with massive, ongoing annotation needs. These providers offer dedicated teams, custom tools, and seamless integration with client workflows. Vertical-specific annotators focus on particular industries like healthcare, automotive, or finance, developing specialized expertise and tools for those domains.

Crowdsourcing platforms provide access to large, distributed workforces capable of rapidly scaling annotation projects. While offering speed and cost advantages, crowdsourcing requires robust quality control since workers typically receive less training and oversight than managed teams. Hybrid models combining crowdsourcing with expert review have emerged as an effective compromise.

When evaluating data annotation services, organizations should assess several critical factors. Security and privacy controls protect sensitive training data throughout the annotation process. Scalability ensures providers can grow capacity to meet increasing demands. Turnaround time impacts development velocity and time-to-market. Cost structure should balance budget constraints with quality requirements. And client support quality determines how smoothly projects execute and how effectively issues get resolved.

The relationship between clients and data annotation service providers has evolved toward true partnerships. Rather than purely transactional engagements, leading providers work closely with clients to understand model requirements, refine annotation guidelines iteratively, and optimize annotation schemas for specific use cases. This collaborative approach produces better outcomes than treating annotation as a commodity service.

How Long Does Data Annotation Take to Respond?

A practical concern for prospective annotators is how long data annotation platforms take to respond to applications. Response times vary considerably depending on the platform or company, current demand for annotators, and the application’s completeness.

For established data annotation platforms, initial application responses typically arrive within a few days to two weeks. Acceptance into the workforce takes longer, however. The full onboarding process, including application review, assessment tests, training completion, and first task assignment, can range from one week to over a month.

Several factors influence response timeframes. Project demand fluctuates: when companies have active projects requiring annotators, hiring accelerates dramatically, while during slower periods applications may sit longer before review. Application quality matters significantly: complete applications with relevant experience, clear communication, and demonstrated attention to detail receive priority consideration. Assessment performance determines progression speed. Most platforms require passing qualification tests before granting access to paid tasks, so strong performance expedites acceptance while marginal scores may require retesting or additional training.

The competitive nature of data annotation positions means not all applicants receive acceptance. Platforms maintain quality standards and manage workforce size relative to available work. Applicants should not necessarily interpret delays as rejections; many platforms maintain applicant pools and reach out when capacity needs increase.

For those wondering how long acceptance will take, setting realistic expectations helps. Apply to multiple platforms simultaneously to increase the odds of timely acceptance. Complete applications thoroughly and carefully, demonstrating the attention to detail essential for annotation work. Respond promptly to any communication from platforms, showing reliability and professionalism. Aim to pass qualification tests on the first attempt by reviewing guidelines carefully and taking time with assessments.

Once accepted, initial task availability may be limited as annotators build reputation and demonstrate consistent quality. Most platforms use performance metrics to allocate tasks, giving priority to annotators with proven track records. New workers should expect a ramp-up period where they establish credibility through high-quality work before accessing higher-volume or better-compensated tasks.

Communication responsiveness varies by platform. Some maintain active support channels with quick response times, while others operate more autonomously with limited direct communication. Understanding each platform’s communication norms helps set appropriate expectations and navigate the worker experience effectively.

Data Annotation Outsourcing Services

Data annotation outsourcing services have become the preferred approach for many organizations developing AI systems. Rather than building internal annotation capabilities from scratch, companies leverage specialized providers to handle the complex, labor-intensive process of creating training data.

The decision to pursue data annotation outsourcing services stems from several strategic considerations. Core competency focus allows companies to concentrate resources on model development, algorithm innovation, and product creation rather than building annotation infrastructure. Cost efficiency emerges from providers’ economies of scale; specialized annotation companies can deliver services at lower per-unit costs than internal operations. Flexible scalability lets companies rapidly expand or contract annotation capacity based on project needs without hiring and layoff cycles. Access to expertise provides domain specialists and annotation professionals that would be costly to hire and maintain internally.

Data annotation outsourcing services typically operate through several engagement models. Project-based outsourcing involves defined deliverables with specific datasets, quality standards, and timelines. This model works well for one-time annotation needs or proof-of-concept projects. Managed services provide ongoing annotation support with dedicated teams, established processes, and continuous quality improvement. This approach suits organizations with sustained annotation requirements.

Hybrid models combine internal and external annotation capacity. Companies may handle sensitive or highly specialized annotation internally while outsourcing higher-volume, more standardized tasks. This balanced approach optimizes for both control and efficiency.

When engaging data annotation outsourcing services, organizations must carefully manage several critical aspects. Data security and confidentiality require robust protections since training data often contains sensitive, proprietary, or personal information. Reputable providers implement encryption, access controls, security audits, and compliance with regulations like GDPR or HIPAA.

Quality assurance becomes paramount when outsourcing. Organizations should establish clear quality metrics, implement validation processes, and maintain oversight even when annotation occurs externally. Service level agreements (SLAs) should specify annotation accuracy targets, turnaround times, and remediation procedures for substandard work.
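A simple way to check delivered work against an SLA accuracy target is to have an in-house reviewer re-label a sample of each batch and compare. The sketch below assumes the audit produces pairs of provider label and reviewer label; the 0.95 target, the function name `audit_batch`, and the returned field names are illustrative assumptions, not values taken from any real agreement.

```python
def audit_batch(audited, accuracy_target=0.95):
    """Compare provider labels with reviewer labels on an audit sample.

    audited: non-empty list of (provider_label, reviewer_label) pairs.
    The reviewer label is treated as correct for the purpose of the audit.
    """
    if not audited:
        raise ValueError("audit sample must not be empty")

    correct = sum(1 for provider, reviewer in audited if provider == reviewer)
    accuracy = correct / len(audited)
    return {
        "sample_size": len(audited),
        "accuracy": accuracy,
        "meets_sla": accuracy >= accuracy_target,
    }


# 18 matching labels and 2 disagreements in a sample of 20.
sample = [("car", "car")] * 18 + [("car", "truck")] * 2
print(audit_batch(sample))
```

Accuracy measured on a small sample is only an estimate, so larger samples give more reliable pass or fail decisions, and a failed audit would normally trigger the remediation procedure defined in the SLA.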

Intellectual property considerations require clear contractual terms defining data ownership, usage rights, and confidentiality obligations. Annotation guidelines and schemas may themselves represent valuable intellectual property requiring protection.

Data annotation outsourcing services are distributed globally. Providers operate in regions with educated workforces, strong language skills, and cost advantages. Different regions excel in different annotation types: some specialize in image annotation for autonomous vehicles, others in text annotation for NLP applications, and still others in specialized domains like medical imaging.

Nearshore versus offshore outsourcing presents different tradeoffs. Nearshore providers offer time zone alignment, cultural similarity, and easier collaboration but typically at higher costs. Offshore providers deliver cost advantages and large workforce availability but may face communication challenges and greater time zone differences.

The future of data annotation outsourcing services points toward increased integration with AI development workflows. Providers are developing tighter API connections, more sophisticated project management tools, and better visibility into annotation progress and quality. The line between annotation services and AI development platforms continues to blur as providers add model training, validation, and deployment capabilities.

Conclusion

Data annotation stands as a foundational element of modern artificial intelligence, transforming raw data into the training fuel that powers intelligent systems. From large language models that engage in sophisticated dialogue to autonomous vehicles navigating city streets, from medical diagnostics that detect diseases early to recommendation systems that personalize our digital experiences, data annotation makes it all possible.

The industry has matured significantly, evolving from ad hoc crowdsourcing to sophisticated, professionalized services with established standards and best practices. Whether considering data annotation jobs, evaluating data annotation services for your organization, or simply understanding what makes AI systems work, appreciating the human intelligence and careful judgment embedded in training data is essential.

As AI continues its rapid advancement, the importance of high-quality data annotation will only grow. The humans who label data, the platforms and tools that facilitate the process, and the companies that provide annotation services collectively enable the AI revolution. Understanding data annotation—its types, technologies, legitimate providers, and career opportunities—offers valuable insight into how artificial intelligence actually comes to life, one carefully placed label at a time.

Partner with Connect2BPO for Expert Data Annotation Services

Building world-class AI systems requires more than just sophisticated algorithms; it demands high-quality, accurately annotated training data. At Connect2BPO, we specialize in delivering professional data annotation services that power the next generation of artificial intelligence applications.

Don’t let data annotation become a bottleneck in your AI development roadmap. Connect2BPO data annotation outsourcing services can support your project goals. Our team of annotation specialists is ready to help you transform raw data into the high-quality training datasets that will power your AI innovation.

Contact Connect2BPO now to learn more about our data annotation services and discover how we can become your trusted partner in AI development.